Stop Deploying AI. Start Designing Intelligence

Stephen Wolfram’s philosophical insights on computation offer actionable principles for designing intelligence environments that achieve lasting value from AI.

Stephen Wolfram is a physicist-turned-entrepreneur whose pioneering work in cellular automata, computational irreducibility, and symbolic knowledge systems fundamentally reshaped our understanding of complexity. His theoretical breakthroughs led to successful commercial products, Wolfram Alpha and Wolfram Language. Despite his success, the broader business community has largely overlooked these foundational insights. As part of our ongoing “Philosophy Eats AI” exploration—the thesis that foundational philosophical clarity is essential to the future value of intelligent systems—we find that Wolfram’s fundamental insights about computation have distinctly actionable, if underappreciated, uses for leaders overwhelmed by AI capabilities but underwhelmed by AI returns.

Ironically, Wolfram once dismissed philosophy. “If there was one thing I was never going to do when I grew up, it was philosophy,” he said, noting that his mother was an Oxford professor of that very subject. A mathematician at heart, he viewed philosophy as unproductive, as “trying to formalize something messy.” Wolfram’s worldview evolved: His life’s work now offers crucial frameworks for both understanding and applying AI in the real world. His insights aren’t clever academic flourishes; they’re imperatives for building intelligence environments that function effectively at scale.

With Wolfram, we explored the idea that AI leadership must shift from better adopting and integrating AI tools to designing intelligence environments, organizational architectures in which human and artificial agents proactively interact to create strategic value. Three insights from his philosophical approach to computation emerged as fundamental to this design challenge, offering a fresh perspective on why traditional approaches to AI adoption fail and what must replace them.

What Is a Designed Intelligence Environment?

In earlier work, we defined and explored the strategic value of intelligent choice architectures embedded in decision environments. A designed intelligence environment goes further still: It’s an enterprise system where humans and machines not only make decisions but also learn, reason, adapt, and improve how knowledge is generated and shared. These environments are not knowledge graphs, either: A knowledge graph maps what an enterprise knows, and the map is not the territory. A genuine intelligence environment explicitly connects epistemology with execution.

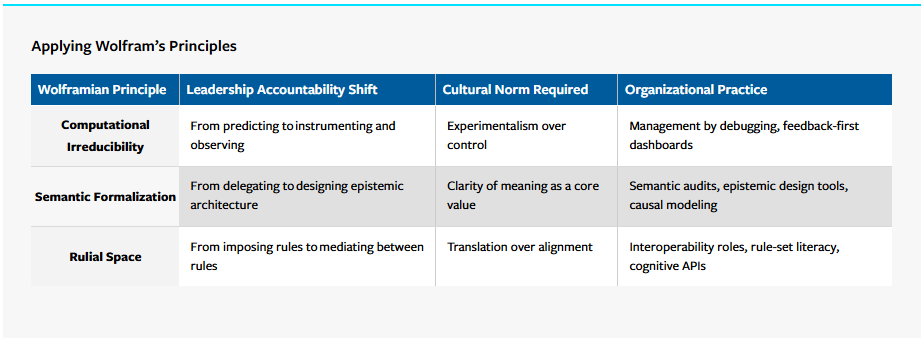

First, Wolfram’s principle of computational irreducibility holds that the behavior of many complex systems cannot be predicted without running them. “You can’t just jump ahead and know what a system will do; you have to run it,” he explained. This makes traditional strategic planning mathematically impossible for many behaviors in AI-rich environments, a constraint with profound implications for how leaders must approach intelligence design. Second, his decades-long project of “taking the knowledge of the world and making it computable” demonstrates that organizational reasoning itself can become programmable, with business concepts defined precisely enough for both human and machine computation. Third, his concept of rulial space shows that different intelligent agents operate under fundamentally different rule sets, requiring what he calls “translation mechanisms” rather than forced alignment. As Wolfram put it, these aren’t just different perspectives on reality; they represent different computational processes generating entirely different ontologies.

Together, these principles transform intelligence environment design from familiar organizational restructuring into what Wolfram would recognize as “epistemic engineering,” the computational formalization of how enterprises think about and structure meaning itself.

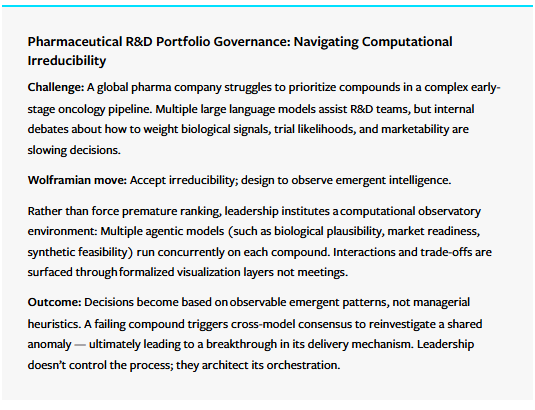

Computational Irreducibility: Why Strategic Planning Is Mathematically Impossible

Wolfram’s computational irreducibility isn’t another argument for agile management. It is a mathematical claim, grounded in the theory of computation, that the outcomes of certain highly complex systems cannot be predicted by any shortcut. Unlike previous critiques of command and control, Wolfram’s insights imply that in sufficiently complex environments, no computational method can predict system behavior meaningfully faster than running the system step by step. In a business context, this means that for AI-rich enterprises, the traditional strategic planning cycle (predict, plan, execute, and measure) is not just inefficient but mathematically impossible for many organizational behaviors. Just as Arrow’s impossibility theorem shows that no social welfare function can turn individual preference rankings into a collective ranking while satisfying a small set of basic fairness conditions, Wolfram’s computational irreducibility means that no strategic plan can fully anticipate the complex array of human, machine, and human-machine behaviors on which its success depends.
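Wolfram’s standard illustration of computational irreducibility is Rule 30, a one-dimensional cellular automaton whose entire update rule fits in a single byte. As far as is publicly known, no formula predicts its center column faster than simulating every step, a question Wolfram has posed as an open prize problem. The Python sketch below is a minimal simulation for readers who want to watch the principle run; the function names are our own and are not part of any Wolfram API.

```python
def rule30_step(cells: list[int]) -> list[int]:
    """Advance one row of the elementary cellular automaton Rule 30.

    Each new cell depends only on its left neighbor, itself, and its right
    neighbor; cells beyond the row's edges are treated as 0.
    """
    padded = [0] + cells + [0]
    next_row = []
    for i in range(1, len(padded) - 1):
        # Encode the 3-cell neighborhood as a number from 0 to 7.
        pattern = (padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1]
        # Bit `pattern` of the rule number (30 = 0b00011110) is the new value.
        next_row.append((30 >> pattern) & 1)
    return next_row


def center_column(steps: int) -> list[int]:
    """Center cell of Rule 30 at steps 0..steps, starting from one black cell.

    The row is 2 * steps + 1 cells wide, so the pattern never reaches the
    edges and the result matches an unbounded row. The function learns
    step n only by computing every step before it.
    """
    width = 2 * steps + 1
    row = [0] * width
    row[steps] = 1
    column = [row[steps]]
    for _ in range(steps):
        row = rule30_step(row)
        column.append(row[steps])
    return column


print(center_column(6))  # → [1, 1, 0, 1, 1, 1, 0]
```

Nothing in `center_column` skips ahead: to learn step n it first computes steps 1 through n-1, which is exactly the situation computational irreducibility describes.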

The radical business implication: Leaders must architect enterprises as computational experiments rather than execution machines. This means building “runtime intelligence” — systems that generate strategically actionable insights via operations rather than analysis. Unlike “learning organizations” or “adaptive cultures,” this requires specific computational infrastructure: real-time observability systems that capture emergent patterns, experimental frameworks that can run multiple strategies simultaneously, and feedback loops that encode learning into organizational behavior. Organizational agility yields to organizational computability.
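One way to read “experimental frameworks that can run multiple strategies simultaneously” plus feedback loops is as a multi-armed bandit. The sketch below uses epsilon-greedy allocation, a swapped-in technique the article does not name: most rounds go to the strategy with the best observed results, while a fraction `epsilon` keeps exploring the others. The strategy names and success rates are hypothetical and the outcomes are simulated; in practice each outcome would be an observed business result.

```python
import random
from dataclasses import dataclass


@dataclass
class StrategyStats:
    trials: int = 0
    successes: int = 0

    @property
    def success_rate(self) -> float:
        return self.successes / self.trials if self.trials else 0.0


def run_experiment(true_rates: dict[str, float], rounds: int,
                   epsilon: float = 0.1, seed: int = 0) -> dict[str, StrategyStats]:
    """Epsilon-greedy allocation across competing strategies.

    `true_rates` exists only to simulate outcomes; the allocator itself sees
    nothing but the results it observes.
    """
    rng = random.Random(seed)
    stats = {name: StrategyStats() for name in true_rates}
    for _ in range(rounds):
        untried = [name for name, s in stats.items() if s.trials == 0]
        if untried:
            choice = untried[0]                    # try every strategy once
        elif rng.random() < epsilon:
            choice = rng.choice(list(stats))       # explore
        else:                                      # exploit the best observed rate
            choice = max(stats, key=lambda name: stats[name].success_rate)
        succeeded = rng.random() < true_rates[choice]  # simulated outcome
        stats[choice].trials += 1
        stats[choice].successes += int(succeeded)
    return stats


stats = run_experiment({"strategy_a": 0.2, "strategy_b": 0.5, "strategy_c": 0.8},
                       rounds=5000, seed=42)
for name, s in stats.items():
    print(f"{name}: {s.trials} trials, observed rate {s.success_rate:.2f}")
```

The design choice worth noting is that learning is encoded in allocation: observed results, not a plan written in advance, decide where the next round of effort goes.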

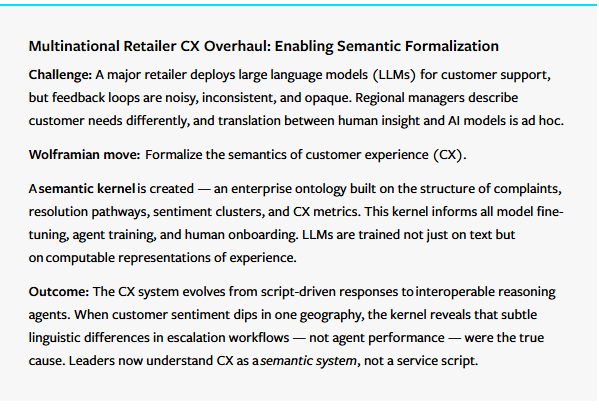

Semantic Formalization: Programming Organizational Reasoning

Wolfram’s notion of semantic formalization goes far beyond data governance or knowledge management: It makes organizational reasoning itself programmable. Legacy approaches emphasize organizing information; semantic formalization treats meaning as executable code. Every business concept, from customer value to operational risk, must be defined precisely enough that both human and AI agents can compute with it. This isn’t about better documentation; it’s about creating what we might call semantic APIs between different reasoning systems. The Wolfram Alpha knowledge engine and the Wolfram Language both serve as living proofs of concept that semantic formalization is not merely theoretical or academic but executable, scalable, and commercially viable.
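A minimal sketch of what a “semantic API” might look like, assuming Python and a hypothetical concept registry: each business concept carries a human-readable description and an executable definition in one versioned object, so people and AI agents compute the same meaning. The customer-value definition uses the widely taught simplified lifetime-value formula `m * r / (1 + d - r)` (constant margin m, retention r, discount rate d); it stands in for whatever definition an enterprise actually adopts.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ConceptDefinition:
    name: str
    version: str
    description: str                # the meaning, written for people
    unit: str
    compute: Callable[..., float]   # the same meaning, executable by machines


def simple_clv(annual_margin: float, retention: float, discount_rate: float) -> float:
    """Simplified customer lifetime value, m * r / (1 + d - r).

    Assumes constant annual margin and retention over an unbounded horizon.
    """
    if not 0 <= retention < 1:
        raise ValueError("retention must be in [0, 1)")
    return annual_margin * retention / (1 + discount_rate - retention)


# Hypothetical registry: one authoritative definition per concept name.
REGISTRY = {
    "customer_value": ConceptDefinition(
        name="customer_value",
        version="1.0",
        description="Discounted margin expected from a customer over the relationship.",
        unit="USD",
        compute=simple_clv,
    ),
}


def evaluate(concept: str, **inputs: float) -> float:
    """One entry point that dashboards, analysts, and AI agents all call."""
    return REGISTRY[concept].compute(**inputs)


print(evaluate("customer_value", annual_margin=100.0, retention=0.8, discount_rate=0.1))
```

Changing what “customer value” means then becomes a new version in one place, visible to every consumer, rather than a quiet edit in one team’s spreadsheet.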

When humans are not the only source of enterprise intelligence, managing — or more precisely, orchestrating — intelligence becomes the foundation for value creation with AI.

Organizations benefit when they can rapidly reconfigure their reasoning infrastructure. Semantically formalizing concepts like quality or urgency, for instance, enables AI systems to adapt their behavior when business priorities shift. Human teams can operate with consistent definitions across functions and geographies. This creates a new form of organizational leverage: Instead of scaling through process standardization, enterprises scale through reasoning standardization. The result is not just operational efficiency but epistemic efficiency — the ability to think and learn faster than competitors.
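Reconfiguring reasoning infrastructure can be as simple as swapping the weights inside a shared, formal definition. In the hypothetical sketch below, “urgency” is a weighted score over normalized factors; the ticket fields, the 24-hour time-pressure scale, and both weight profiles are illustrative assumptions. When priorities shift from growth to safety, the same two tickets rank in the opposite order without retraining anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ticket:
    ticket_id: str
    revenue_at_risk: float    # normalized to [0, 1]
    safety_impact: float      # normalized to [0, 1]
    hours_to_deadline: float


def urgency(ticket: Ticket, weights: dict[str, float]) -> float:
    """Shared, formal definition of 'urgency' as a weighted score.

    Time pressure is 1 when the deadline is now and falls toward 0 as the
    deadline recedes (halving after 24 hours).
    """
    time_pressure = 1.0 / (1.0 + max(ticket.hours_to_deadline, 0.0) / 24.0)
    return (weights["revenue"] * ticket.revenue_at_risk
            + weights["safety"] * ticket.safety_impact
            + weights["time"] * time_pressure)


def rank(tickets: list[Ticket], weights: dict[str, float]) -> list[str]:
    """Ticket IDs ordered from most to least urgent under `weights`."""
    ordered = sorted(tickets, key=lambda t: urgency(t, weights), reverse=True)
    return [t.ticket_id for t in ordered]


# Hypothetical priority profiles; shifting priorities means swapping one dict.
GROWTH_PRIORITIES = {"revenue": 0.6, "safety": 0.2, "time": 0.2}
SAFETY_PRIORITIES = {"revenue": 0.2, "safety": 0.6, "time": 0.2}

tickets = [
    Ticket("T-1", revenue_at_risk=0.9, safety_impact=0.1, hours_to_deadline=48),
    Ticket("T-2", revenue_at_risk=0.2, safety_impact=0.9, hours_to_deadline=48),
]
print(rank(tickets, GROWTH_PRIORITIES))  # → ['T-1', 'T-2']
print(rank(tickets, SAFETY_PRIORITIES))  # → ['T-2', 'T-1']
```

Because every team and agent calls the same `urgency` function, a priority change is one configuration change rather than a round of re-briefings.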

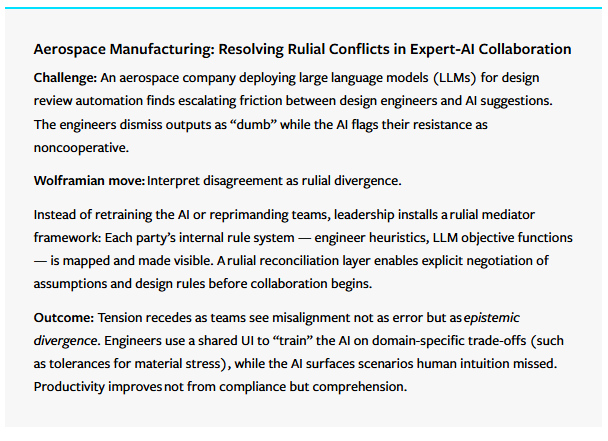

Rulial Space: Translation Between Computational Worldviews

Different intelligences compute different realities. Each department, AI system, and decision maker operates according to different update rules, generating distinct realities from the same inputs. Finance sees risk through Monte Carlo simulations; operations sees it through failure mode analysis; AI systems see it through statistical correlations. These aren’t different perspectives on the same reality — they are different computational processes generating different ontologies. Traditional diversity and inclusion approaches assume alignment is possible through better communication, but rulial space shows this is computationally naive.

The solution requires building what we might call cognitive compilers — translation systems that can convert between different rule sets without losing computational precision. This goes beyond cross-functional teams or shared dashboards to require explicit mapping of how each agent’s internal logic processes information, formal protocols for translating between different reasoning systems, and algorithmic arbitration when different rule sets generate conflicting outputs. Organizations that master rulial engineering can coordinate multiple forms of intelligence — human intuition, AI pattern recognition, algorithmic optimization — without forcing them into artificial consensus. The result is not diversity management but intelligence orchestration. Applying the concept of rulial space reveals that organizational misalignment isn’t inherently a cultural or incentives problem — it’s a computational architecture problem.
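A toy “cognitive compiler” for the risk example above, written as a hypothetical Python sketch. Finance’s rule set estimates the probability that a loss exceeds a tolerance by Monte Carlo simulation (normally distributed losses are an assumption); operations’ rule set uses the FMEA risk priority number, severity × occurrence × detection on 1-10 scales. Each reading keeps its native value and is translated into a shared tier; the tier thresholds are illustrative, not standard. Arbitration takes the more severe tier and flags any disagreement for human review instead of forcing consensus.

```python
import random
from dataclasses import dataclass

TIER_ORDER = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class RiskReading:
    source: str          # which rule set produced the reading
    native_value: float  # kept in the source's own units, never overwritten
    tier: str            # shared vocabulary that both sides can compare


def finance_reading(mean_loss: float, sd_loss: float, tolerance: float,
                    n: int = 10_000, seed: int = 0) -> RiskReading:
    """Finance's rule set: Monte Carlo estimate of P(loss > tolerance)."""
    rng = random.Random(seed)
    p_exceed = sum(rng.gauss(mean_loss, sd_loss) > tolerance for _ in range(n)) / n
    tier = "high" if p_exceed >= 0.20 else "medium" if p_exceed >= 0.05 else "low"
    return RiskReading("finance", p_exceed, tier)


def operations_reading(severity: int, occurrence: int, detection: int) -> RiskReading:
    """Operations' rule set: FMEA risk priority number (each rating 1-10)."""
    if not all(1 <= r <= 10 for r in (severity, occurrence, detection)):
        raise ValueError("FMEA ratings must be between 1 and 10")
    rpn = severity * occurrence * detection
    tier = "high" if rpn >= 200 else "medium" if rpn >= 80 else "low"
    return RiskReading("operations", float(rpn), tier)


def arbitrate(a: RiskReading, b: RiskReading) -> tuple[str, bool]:
    """Return (shared tier, needs human review).

    Conflicts are not averaged away: the more severe tier wins, and any
    disagreement is flagged so that both native readings get examined.
    """
    shared = max(a.tier, b.tier, key=TIER_ORDER.__getitem__)
    return shared, a.tier != b.tier


fin = finance_reading(mean_loss=100.0, sd_loss=20.0, tolerance=80.0)
ops = operations_reading(severity=8, occurrence=3, detection=4)
print(fin, ops, arbitrate(fin, ops))
```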

Why Smarter Tools Won’t Save Stagnant Enterprises

AI investments are exploding, but enterprise returns remain mediocre. The core issue? Leadership deploys intelligence as if it were automation. But intelligence — human or machine — can’t simply be inserted into workflows; it must be architected into environments. Most organizational designs manage effort and enforce alignment — they do not orchestrate reasoning, learning, or adaptive value creation. This is their AI strategic blind spot. Yet this is precisely where Wolfram’s computational philosophy offers essential and actionable clarity: Unlocking AI’s value requires leaders to ask not what tools can do but what architectures and infrastructures let intelligence emerge, evolve, and flourish.

Organizations that treat intelligence as a designable infrastructure — not as an emergent property of tools — are likely to obtain faster, higher-quality decisions; reduced systemic risk; and enhanced adaptive capacity.

Designing Intelligence Environments

Designing an intelligence environment requires leaders to think like systems architects rather than process managers. The design process begins with mapping the organization’s current intelligence topology — identifying where knowledge is created, how reasoning flows between human and AI agents, and where meaning breaks down or gets lost in translation. Leaders must then make explicit design choices about three foundational elements: the semantic infrastructure (how key concepts will be formally defined and made computable), the reasoning protocols (how different types of intelligence will interact and influence each other), and the learning architecture (how insights will be captured, validated, and propagated throughout the system).
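Mapping where “meaning breaks down or gets lost in translation” can start small. The hypothetical sketch below records which definition version each agent uses for a concept and which handoffs pass that concept between agents, then flags handoffs where the two sides disagree or one side has no definition at all. Agent names, concepts, and version labels are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Handoff:
    sender: str
    receiver: str
    concept: str


def find_semantic_breaks(definitions: dict[str, dict[str, str]],
                         handoffs: list[Handoff]) -> list[Handoff]:
    """Return handoffs where sender and receiver define the concept
    differently, or where either side has no definition at all."""
    breaks = []
    for h in handoffs:
        sent = definitions.get(h.sender, {}).get(h.concept)
        received = definitions.get(h.receiver, {}).get(h.concept)
        if sent is None or received is None or sent != received:
            breaks.append(h)
    return breaks


# Hypothetical topology: which definition version each agent uses.
definitions = {
    "sales_team":    {"qualified_lead": "v2"},
    "scoring_model": {"qualified_lead": "v1"},
    "finance_team":  {"qualified_lead": "v2", "churn_risk": "v1"},
}
handoffs = [
    Handoff("sales_team", "finance_team", "qualified_lead"),
    Handoff("sales_team", "scoring_model", "qualified_lead"),
    Handoff("scoring_model", "finance_team", "churn_risk"),
]
for h in find_semantic_breaks(definitions, handoffs):
    print(f"{h.sender} -> {h.receiver}: '{h.concept}' is not shared")
```

Here the scoring model still uses an older definition of a qualified lead, and it passes churn risk onward without formally defining it; both handoffs are flagged.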

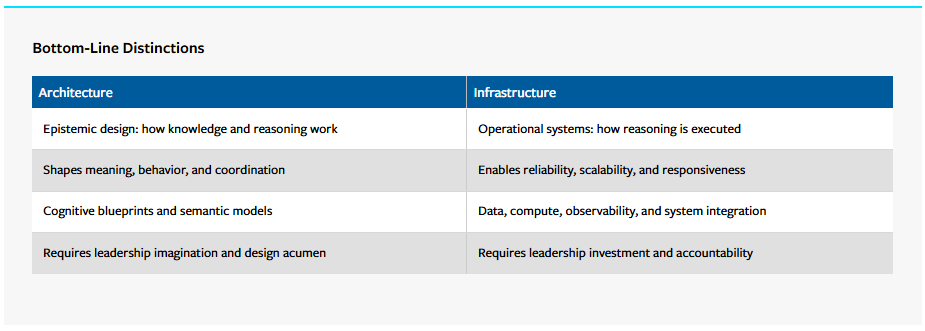

Unlike traditional organizational design that focuses on roles and reporting structures, intelligence environment design focuses on information flows, decision pathways, and knowledge evolution patterns — intelligence architecture and infrastructure. It requires new design tools — semantic modeling, reasoning pathway mapping, and computational experimentation frameworks — that executives have yet to adopt. Architecting intelligence is a fundamentally different task from procuring and adopting it.

A brilliant architecture without infrastructure is impossible to implement. Infrastructure without architecture is a costly, chaotic zoo of tools. Leaders must co-own both. That’s how intelligent systems become trusted, scalable, and transformational.

1. Intelligence Architecture: Design for Meaning and Behavior

Definition: Intelligence architecture refers to the intentional design of how intelligence is structured, distributed, and coordinated across an enterprise. It’s the epistemic blueprint — the models, rules, and relationships that govern how knowledge is created, shared, reasoned with, and evolved.

Wolframian root: This aligns with rulial space and semantic formalization — where differing rule sets (reasoning systems) and representational semantics must be coherently reconciled. Architecture is where cognitive interoperability gets designed.

Executive role: Leaders govern architecture when they decide:

- How AI and human decisions interact.

- Where to embed feedback loops.

- Which semantics, frames, or ontologies get formalized and standardized.

- What constitutes “valid” knowledge in key domains.

Analogy: Think of architecture as urban planning for intelligence — it defines the zoning, the rules, the cultural logic, and the allowed flows of traffic (reasoning) and commerce (decision-making).

2. Intelligence Infrastructure: Capability for Execution and Scale

Definition: Intelligence infrastructure is the technical and operational foundation that allows intelligence architectures to be realized, maintained, scaled, and secured. It includes tools, systems, APIs, platforms, pipelines, data stores, and compute environments.

Wolframian root: This is where computational irreducibility lives. You run the system; you observe behavior. Infrastructure is what lets you run your AI-human ensemble and collect auditable traces of its reasoning.
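“Auditable traces” of reasoning need some form of tamper evidence. A minimal sketch, assuming a simple hash chain built with Python’s standard `hashlib` and `json` modules: each entry’s SHA-256 digest covers the previous entry’s digest, so silently editing an earlier decision breaks verification. The `ReasoningLog` class and its fields are hypothetical; a production system would add timestamps, signing, and durable storage.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class ReasoningLog:
    """Append-only log of human and AI decisions, chained by SHA-256."""
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        # sort_keys makes the serialization, and therefore the hash, deterministic.
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, agent: str, inputs: dict, decision: str, rationale: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else "genesis"
        record = {"agent": agent, "inputs": inputs,
                  "decision": decision, "rationale": rationale}
        entry_hash = self._digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every link; any edited record or broken link fails."""
        prev_hash = "genesis"
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


log = ReasoningLog()
log.append("pricing_model", {"sku": "A1", "demand_index": 0.7}, "discount 5%", "demand below forecast")
log.append("category_manager", {"model_decision": "discount 5%"}, "approve", "within policy limits")
print(log.verify())  # → True

log.entries[0]["record"]["decision"] = "discount 50%"  # simulate a silent edit
print(log.verify())  # → False
```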

Executive role: Leaders oversee infrastructure when they:

- Fund observability and experimentation platforms.

- Invest in data interoperability and model transparency.

- Integrate AI reasoning logs into enterprise risk management.

- Ensure alignment between back-end systems and front-line decision agents.

Analogy: If architecture is urban planning, infrastructure is roads, sewers, electric grids, and data networks — the literal capability to make the plan work at scale.

Leadership’s New Role: Architects of Intelligence

Wolfram’s lessons go beyond optimizing AI investment; they encourage leaders to build intelligence architectures that help enterprises reason better, learn faster, and adapt ahead of competitors.

The organizations extracting maximum value from AI will have leaders architecting intelligence environments rather than deploying the latest technologies. They will design how teams collaborate with AI systems, structure decision-making harnessing both human insight and machine processing, and build feedback loops that continuously sharpen collective performance. Organizational design, not technology rollout, determines the magnitude of value creation with AI.

The choice is binary: Intentionally architect organizational intelligence, or accept the limits of purely human cognition. Embrace epistemic design as a leadership imperative, or let the future be decided by black-box drift. Organizations that grasp that intentional cognitive design trumps cost-effective technology acquisition will outmaneuver those on an endless hunt for ever-smarter software. Only conceptual clarity transforms intelligence from expensive complexity into competitive advantage. Philosophy doesn’t merely inform AI strategy; it gives strategy meaning, purpose, and measurable alignment.