The Perils of Algorithmic Pricing

Some revenue management systems based on algorithms may lead to unintended collusion and antitrust violations.

For decades, hotels, airlines, casinos, and other companies have used revenue management systems to help them set prices, maximize revenues, and gain competitive advantage. Now, in a series of legal cases, plaintiffs have argued that some of those systems’ pricing algorithms could be used to facilitate illegal price-fixing in violation of federal antitrust law.

Typically, collusion over pricing requires explicit coordination among competitors, the kind one might imagine occurring in the stereotypical smoke-filled room. What makes the recent lawsuits worth paying attention to is the idea, expressed by federal regulators, that the use of pricing algorithms can lead to collusion without such overt agreements — and even if companies didn’t intend to collude. If this view of collusion prevails, it could pave the way for even more antitrust lawsuits over algorithmic pricing.

In this article, we want to highlight the possible antitrust risks posed by algorithmic pricing and provide an analytical framework for understanding how the algorithms use data and guide pricing decisions in ways that can lead to illegal collusion.

The Legal Cases Alleging Collusion

Over the past few years, pricing algorithms have become the target of several class-action lawsuits alleging that the systems enable users to collude illegally in setting prices. In 2023, plaintiffs brought suit against casino hotels in Las Vegas and Atlantic City, New Jersey, and their revenue management (RM) system vendor, Cendyn Group, whose Rainmaker algorithm is used to set prices and control inventory.1 More recently, plaintiffs filed suit against large hotel brands and SAS Institute subsidiary Integrated Decisions and Systems (IDeaS), whose software is deployed at more than 30,000 hotels around the world.2 The pending suits allege that the hotels, by delegating pricing to the shared algorithm, implicitly colluded to set prices that were higher than would be found in a truly competitive market.

Two algorithm vendors serving the multifamily housing market — RealPage and Yardi Systems — face similar class-action antitrust lawsuits. In October, 27 property firms agreed to pay a total of $141 million to settle one class action (RealPage and other landlords remain defendants).3 RealPage is also the subject of a civil antitrust suit filed by the U.S. Department of Justice and attorneys general in eight states. The government complaint argues that landlords fed proprietary, nonpublic data into a centralized pricing algorithm that used the data to recommend rental rates that landlords adopt, often automatically. In addition, the government alleges that RealPage sought to reduce competition and monopolize the market for RM software used by apartment landlords.4 The DOJ has settled with some large landlords who were customers of RealPage, requiring them to stop using the RM software.

Further lawsuits have been brought against two technology companies, Amadeus IT Group and CoStar Group, that provide the data used by the pricing algorithms.5 Plaintiffs in these cases accuse the companies and their hotel clients of implicitly colluding to set prices through a give-to-get agreement: Hotels share performance data for past and, in some cases, future stay dates, and in return receive partially aggregated data about their competitors. Both cases have been dismissed.

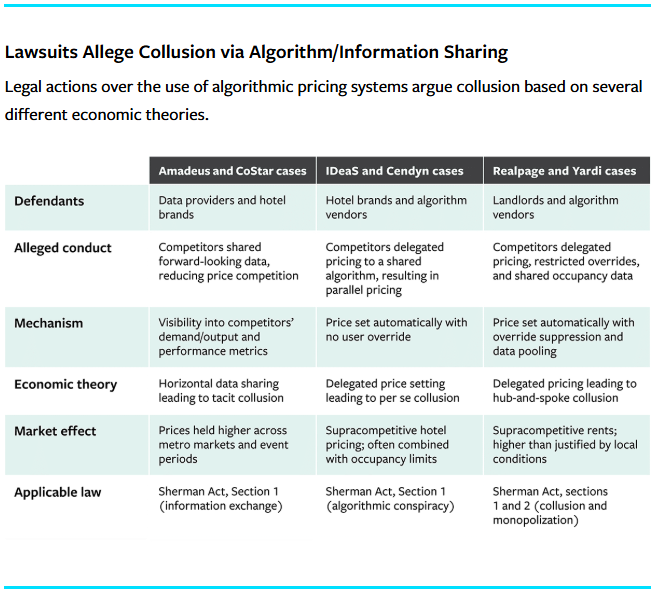

Several of the cases are on appeal or have been refiled with amended arguments. Meanwhile, the complaints have been bolstered by the intervention of the U.S. Federal Trade Commission and the DOJ in early 2024. In a statement of interest filed in the casino hotels case, the agencies argued that the use of pricing algorithms can constitute per se collusion and be a violation of antitrust law even if competitors don’t communicate directly about prices.6 Further, they contended that collusion can exist even if the algorithms only recommend prices that must be approved by hotel operators or landlords. Simply delegating pricing decisions to a common algorithm can amount to concerted action and price-fixing, the agencies maintained. (See “Lawsuits Allege Collusion via Algorithm/Information Sharing” for a summary of key aspects of these cases.)

The agencies’ position has been reinforced by legislation currently pending in the U.S. Congress. U.S. Sen. Amy Klobuchar (D-Minnesota) has introduced the Preventing Algorithmic Collusion Act, which would prohibit pricing algorithms from using nonpublic competitor data to recommend or set prices in a way that could facilitate collusion. It would also create a presumption of an antitrust violation when pricing algorithms are used to set or stabilize prices, even if there is no explicit agreement to do so.7

At the state level, California recently amended its antitrust statute to regulate the use or distribution of “common pricing algorithms.” The attention from politicians and regulators sends a powerful warning about the potential risks companies can face when using algorithmic pricing.

A Brief Price Collusion Primer

The Sherman Antitrust Act was passed in 1890 to promote competition and prevent monopolies. Section 1 makes it illegal for businesses to engage in agreements that restrict competition, while Section 2 prohibits efforts to monopolize a market. The cases we’ve been discussing mainly allege violations of Section 1, claiming that pricing algorithm vendors and their clients collude in setting prices either by sharing nonpublic information or by implicitly coordinating prices. In addition, the DOJ case against RealPage alleges monopoly practices in violation of Section 2.

The basis for the cases is a type of coordination among competitors known as hub-and-spoke collusion. In antitrust law, hub-and-spoke collusion occurs when companies engage in anticompetitive behavior, such as price-fixing, with the help of a central third party. The plaintiffs’ argument is that the companies that provide pricing algorithms or collect and share nonpublic data act as the hub, and the spokes are the individual hotel chains or apartment landlords.

The most common form of price-fixing involves a group of companies that get together to set prices, a practice known as explicit collusion. However, illegal price-fixing may also occur more indirectly as tacit collusion when companies adopt similar pricing strategies without direct communication. As the FTC and DOJ statements indicate, companies that rely on pricing algorithms may practice intentional, explicit collusion or take actions that unintentionally result in the tacit coordination of prices and reduced competition even if there’s no explicit agreement between the companies involved.

How Pricing Algorithms Can Lead to Collusion

Revenue management systems have been around since the 1970s, when they were adopted by recently deregulated airlines to maximize the value of their limited and perishable inventory of seats. Similar systems were soon used by the hospitality and car rental industries. Since then, RM has expanded and now covers a broad range of industries, and the systems have become powered by more and more sophisticated algorithms for forecasting demand and setting optimal prices. Where RM systems were originally employed by individual companies looking for an advantage over competitors, many current RM systems are used by multiple — often competing — companies, creating the potential for collusive behavior.

Whether a pricing algorithm can lead to collusive behavior hinges on two questions: Does the algorithm base pricing decisions only on a company’s internal data and publicly available data from its competitors, or does it also include competitors’ private proprietary information? And are the decisions made in a centralized or decentralized fashion?

In answering these questions, it’s useful to consider three main factors: the software platform, data, and mathematical modeling.

The software platform integrates the data and models and deploys pricing recommendations, and the way it’s integrated across companies may affect whether it facilitates collusion. The software platform can act in a hub-and-spoke fashion, collecting data from its customers and providing centralized directions for how to set prices. In such a scenario, courts could conclude that this is a form of explicit collusion, since competitors may adopt similar, or at least coordinated, prices. This forms the basis of plaintiffs’ allegations in the RealPage and Yardi cases.

In the IDeaS and Cendyn cases, the plaintiffs have argued that a software platform may also facilitate tacit collusion even if pricing decisions are made in a decentralized way, without direct coordination among competitors. What matters here is whether the software relies strictly on a company’s own internal data (plus publicly available market information) in making its recommendations or also draws on competitors’ nonpublic, proprietary information.

Here, the distinction between public and private data is crucial. If a company relies on only its internal information, little or no danger of collusion exists. When a company has access to competitors’ private, proprietary data, the information may improve its ability to forecast demand or allow it to anticipate competitors’ actions. This can lead companies to set prices higher than they would if they were acting without knowledge of competitors’ pricing choices.
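The two questions that frame this analysis (whose data the algorithm uses, and where pricing decisions are made) can be condensed into a coarse screening rule. The Python sketch below is hypothetical: the function name and risk labels are ours, and it is meant to organize the reasoning above, not to serve as a legal test.

```python
from enum import Enum


class Risk(Enum):
    LOW = "little or no collusion risk"
    TACIT = "potential tacit collusion"
    HUB_AND_SPOKE = "potential hub-and-spoke (explicit) collusion"


def assess_pricing_setup(uses_competitor_private_data: bool, centralized: bool) -> Risk:
    """Map the framework's two questions to a coarse, hypothetical risk label."""
    if centralized:
        # A shared platform that collects customers' data and issues
        # centralized pricing directions acts as the "hub."
        return Risk.HUB_AND_SPOKE
    if uses_competitor_private_data:
        # Decisions are decentralized but informed by competitors'
        # nonpublic, proprietary information.
        return Risk.TACIT
    # Only the firm's internal data plus publicly observable competitor data.
    return Risk.LOW


print(assess_pricing_setup(uses_competitor_private_data=False, centralized=False).value)
```

The ordering of the checks reflects the article’s argument: centralization is treated as the most serious configuration, and private competitor data matters even when each firm decides on its own.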

Mathematical modeling within pricing algorithms performs two functions: It forecasts market demand based on a given set of prices and other variables, and then it recommends the optimal prices to maximize expected revenue based on a given demand forecast.

For example, a model might forecast demand by analyzing how the prices of a company and its competitors affected past sales and then estimate expected future demand for any combination of prices in the market. These predictions can be improved as more public and private data is shared across companies.8 However, predicting demand is of value only if it enables businesses to set better — and, ideally, the best — prices. To do that, the model uses an optimization framework, which recommends the prices that will result in the most revenue.
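To make the two steps concrete, here is a minimal sketch assuming a simple linear demand model, units = a − b·own_price + c·competitor_price, fitted by least squares to past sales. The revenue-maximizing price then follows from setting the derivative of price × demand to zero. The functions and the synthetic, noise-free history are illustrative assumptions; commercial RM systems use far richer forecasting and optimization models.

```python
def solve_3x3(m, v):
    """Solve the 3x3 linear system m @ x = v by Gaussian elimination with partial pivoting."""
    aug = [list(row) + [v[i]] for i, row in enumerate(m)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def fit_linear_demand(history):
    """Forecasting step: least-squares fit of units = a - b*own + c*competitor.

    history: iterable of (own_price, competitor_price, units_sold) tuples.
    Returns (a, b, c).
    """
    xtx = [[0.0] * 3 for _ in range(3)]
    xty = [0.0] * 3
    for own, comp, units in history:
        row = (1.0, own, comp)  # intercept, own price, competitor price
        for i in range(3):
            xty[i] += row[i] * units
            for j in range(3):
                xtx[i][j] += row[i] * row[j]
    intercept, own_coef, comp_coef = solve_3x3(xtx, xty)
    return intercept, -own_coef, comp_coef


def revenue_maximizing_price(a, b, c, competitor_price):
    """Optimization step: maximize p * (a - b*p + c*competitor_price); dR/dp = 0 gives this."""
    return (a + c * competitor_price) / (2 * b)


# Synthetic, noise-free history generated from a = 100, b = 2, c = 1 (illustrative only).
price_pairs = [(30, 40), (35, 38), (40, 45), (32, 50), (38, 35)]
history = [(p, pc, 100 - 2 * p + pc) for p, pc in price_pairs]

a, b, c = fit_linear_demand(history)
best_price = revenue_maximizing_price(a, b, c, competitor_price=40)
print(f"a={a:.2f}, b={b:.2f}, c={c:.2f}, best price={best_price:.2f}")
# prints a=100.00, b=2.00, c=1.00, best price=35.00
```

Note that the recommended price depends on the competitor’s price through c, which is exactly where the source of competitor data (public or private) enters the calculation.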

When analyzing competitive markets, an optimization model typically aims to converge toward a Nash equilibrium: a set of prices at which no single competitor can increase its revenue by unilaterally setting its price higher or lower. Although this can result in prices that are roughly aligned around the equilibrium price, it is unlikely to lead to collusion as long as all parties set their prices independently based on publicly available data, such as observable market prices.
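A minimal sketch of how independent pricing can settle at a Nash equilibrium, assuming a symmetric duopoly with the same hypothetical linear demand as above (q_i = a − b·p_i + c·p_j). Each firm repeatedly best-responds to its rival’s last posted, public price; when c < 2b the iteration contracts toward the equilibrium, which for this symmetric case has the closed form p* = a / (2b − c). The parameter values and starting prices are assumptions chosen for illustration.

```python
def best_response(rival_price, a, b, c):
    """Revenue-maximizing own price given the rival's price, for q = a - b*p_own + c*p_rival."""
    return (a + c * rival_price) / (2 * b)


def nash_by_best_response(a, b, c, start=(10.0, 60.0), tol=1e-10, max_iter=1000):
    """Iterate simultaneous best responses until neither firm wants to move."""
    p1, p2 = start
    for _ in range(max_iter):
        # Each firm reacts only to the rival's last posted (public) price.
        next1 = best_response(p2, a, b, c)
        next2 = best_response(p1, a, b, c)
        if abs(next1 - p1) < tol and abs(next2 - p2) < tol:
            return next1, next2
        p1, p2 = next1, next2
    raise RuntimeError("best-response iteration did not converge")


p1, p2 = nash_by_best_response(a=100, b=2, c=1)
print(round(p1, 4), round(p2, 4))  # prints 33.3333 33.3333
```

Both firms end up at roughly the same price even though neither coordinated with the other, which is the “roughly aligned” outcome described above.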

The dynamic changes when the optimization model incorporates nonpublic data, such as proprietary demand and capacity information. If this information signals a likely surge in demand or anticipated scarcity, the algorithm may recommend higher prices than would occur in a strictly competitive market. When companies using pricing algorithms set prices above the equilibrium, it can be a sign of tacit collusion.
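Under the same hypothetical linear-demand duopoly, one can compare the competitive (Nash) benchmark with the common price that would maximize the firms’ combined revenue, and flag observed prices that sit well above the benchmark. This is a sketch under stated assumptions: the demand parameters and the 5% tolerance are illustrative, and a price above the benchmark is a signal worth examining, consistent with “can be a sign,” not proof of collusion.

```python
def competitive_price(a, b, c):
    """Symmetric Nash price when each firm independently maximizes p_i * (a - b*p_i + c*p_j)."""
    return a / (2 * b - c)


def joint_revenue_price(a, b, c):
    """Common price maximizing revenue if both firms charge the same p: p * (a - (b - c) * p).

    Assumes b > c, so demand still falls when both firms raise prices together.
    """
    return a / (2 * (b - c))


def flag_above_benchmark(observed_prices, a, b, c, tolerance=0.05):
    """Return observed prices more than `tolerance` (a fraction) above the competitive benchmark."""
    threshold = competitive_price(a, b, c) * (1 + tolerance)
    return [p for p in observed_prices if p > threshold]


print(round(competitive_price(100, 2, 1), 2))  # 33.33
print(joint_revenue_price(100, 2, 1))          # 50.0
print(flag_above_benchmark([33.0, 34.0, 45.0, 49.5], 100, 2, 1))  # [45.0, 49.5]
```

The gap between the two benchmarks (about 33 versus 50 here) is the room that shared nonpublic information could, in principle, help competitors move into.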

Avoiding Algorithmic Collusion

As the adoption of AI and algorithmic pricing systems grows, the risk of collusion between companies — even if only the tacit variety — increases.9 While this article’s intent is to provide information about legal risks, not to offer legal advice, we believe that the following three strategies may help companies steer clear of implicit collusion.

Ensure data transparency. Companies should make it clear what type of data their algorithm is and isn’t using to make its recommendations. This means disclosing that their algorithmic pricing is based only on available inventory and publicly available information about competitors’ prices, and that the model seeks to optimize prices based on achieving a competitive equilibrium. By limiting or eliminating the sharing of nonpublic, sensitive information — such as competitors’ future demand forecasts — companies can prevent unintended price alignment. Specifically, it is essential to avoid platforms that pool competitors’ data and provide centralized pricing decisions.
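One way to make data use explicit in practice is to pass every record through an allowlist before it reaches the pricing model. The sketch below assumes a hypothetical schema: the field names and the `competitor_` prefix convention are illustrative, not taken from any vendor’s product.

```python
# Hypothetical schema: field names are illustrative, not from any vendor's product.
ALLOWED_FIELDS = {
    "own_inventory",            # the firm's own available inventory
    "own_sales_history",        # the firm's own past bookings
    "competitor_posted_price",  # publicly observable competitor prices
}


def filter_model_inputs(record):
    """Pass only allowlisted fields to the pricing model.

    Raises ValueError if the record carries any competitor field that is not
    on the allowlist (e.g., a competitor's demand forecast), so nonpublic
    competitor data is rejected loudly rather than dropped silently.
    """
    blocked = sorted(
        key for key in record
        if key.startswith("competitor_") and key not in ALLOWED_FIELDS
    )
    if blocked:
        raise ValueError(f"nonpublic competitor fields rejected: {blocked}")
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


record = {"own_inventory": 12, "competitor_posted_price": 199.0, "room_type": "king"}
print(filter_model_inputs(record))  # {'own_inventory': 12, 'competitor_posted_price': 199.0}
```

Because the allowlist is a single explicit set, it can double as the disclosure of what the algorithm does and does not use.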

Avoid naive rule-based pricing. Many companies employ simple rule-based approaches when setting prices, but research indicates that simple rules, such as matching or undercutting competitors’ prices, can unintentionally stabilize pricing above competitive levels.10 Empirical work on online markets suggests that the speed at which an algorithm responds to competitors’ price moves can by itself drive prices to converge. The dangers of pricing rules that are too simple are illustrated by a well-known case on Amazon, where an algorithm-driven pricing spiral over a used developmental biology book drove its price to nearly $24 million in 10 days.
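The mechanics of a runaway spiral like the Amazon example can be sketched with two rules that each peg one seller’s price to the other’s. The multipliers below are hypothetical (one seller slightly undercuts, the other charges a fixed premium); whenever their product exceeds 1, prices grow geometrically with no reference to demand at all.

```python
def simulate_linked_repricing(start_price, undercut=0.998, markup=1.27, rounds=10):
    """Two sellers whose naive rules peg each price to the other's.

    Seller A always prices at `undercut` times seller B's price; seller B always
    prices at `markup` times seller A's price. Both multipliers are hypothetical.
    Returns a list of (round, price_a, price_b).
    """
    price_a = price_b = start_price
    path = []
    for r in range(1, rounds + 1):
        price_a = undercut * price_b  # A reacts to B's latest price
        price_b = markup * price_a    # B reacts to A's new price
        path.append((r, price_a, price_b))
    return path


for r, pa, pb in simulate_linked_repricing(35.0):
    print(f"round {r:2d}: A=${pa:,.2f}  B=${pb:,.2f}")
```

With these assumed multipliers each round scales prices by about 1.27, so after 10 rounds prices are roughly ten times the starting level; more frequent repricing compounds the effect faster.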

Companies should avoid rules that automatically link their pricing decisions to competitors’ prices. Automated price-matching or undercutting rules, though seemingly efficient, may lead to parallel pricing, even without any formal agreement to match prices. Instead, companies should design and use algorithms that adjust prices based on realized demand or market conditions, not exclusively on competitors’ prices. This reduces the risk of price pegging and promotes independent decision-making.
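By contrast, a rule can be driven by the firm’s own realized demand, with competitor prices deliberately left out of its inputs. The sketch below is a hypothetical, deliberately simple rule (a fixed percentage step up or down against a demand forecast, with optional bounds); it illustrates the design principle rather than a recommended production algorithm.

```python
def demand_based_price(current_price, units_sold, units_forecast,
                       step=0.05, floor=None, ceiling=None):
    """Adjust price from realized versus forecast demand; competitor prices are not an input.

    Hypothetical rule: raise the price by `step` (a fraction) when sales beat the
    forecast, cut it by `step` when they fall short, and hold it otherwise.
    Optional floor/ceiling bound the result.
    """
    if units_sold > units_forecast:
        new_price = current_price * (1 + step)
    elif units_sold < units_forecast:
        new_price = current_price * (1 - step)
    else:
        new_price = current_price
    if floor is not None:
        new_price = max(new_price, floor)
    if ceiling is not None:
        new_price = min(new_price, ceiling)
    return new_price


print(round(demand_based_price(100.0, units_sold=60, units_forecast=50), 2))  # 105.0
print(round(demand_based_price(100.0, units_sold=40, units_forecast=50), 2))  # 95.0
```

The key design choice is the function signature itself: with no competitor price argument, the rule cannot peg to a rival’s moves.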

Place checks on algorithms. Companies should limit automatic price-acceptance features and ensure that managers retain oversight of an algorithm’s price recommendations and posted prices. Encouraging manual overrides and careful evaluation of algorithmic price adjustments can reduce the risk of implicit collusion by keeping pricing decisions under human control.
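A simple way to limit automatic price acceptance is a review gate: small changes are posted automatically, while larger ones are held until a manager signs off. The 10% threshold and the names below are hypothetical policy choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class PricingDecision:
    price: float          # price that will actually be posted
    auto_accepted: bool   # True if no human review was needed
    note: str


def review_recommendation(current_price, recommended_price, max_auto_change=0.10):
    """Auto-accept only small moves; hold larger ones for a pricing manager.

    max_auto_change is a hypothetical policy threshold (fractional change).
    """
    change = abs(recommended_price - current_price) / current_price
    if change <= max_auto_change:
        return PricingDecision(recommended_price, True, f"{change:.0%} change auto-accepted")
    # Keep the current price posted until a manager approves, edits, or rejects it.
    return PricingDecision(current_price, False, f"{change:.0%} change held for manager review")


print(review_recommendation(100.0, 104.0))
print(review_recommendation(100.0, 130.0))
```

Tightening `max_auto_change` shifts more decisions to human reviewers; setting it to zero turns the algorithm into a purely advisory tool.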

Recent case law is consistent with these strategies. In Gibson v. Cendyn, the U.S. Court of Appeals for the 9th Circuit recently affirmed the dismissal of the plaintiffs’ claims, holding that absent an actual agreement, the parallel adoption of the same pricing algorithm is not itself collusion.12 The ruling underscores that the antitrust peril lies less in the use of firm-specific and publicly observable data, and more in the incorporation of proprietary, competitor-specific information and/or the use of centralized algorithms.

References

1. Cornish-Adebiyi v. Caesars Entertainment, Inc., No. 1:23-cv-02536 (D.N.J., filed 2023); and Gibson v. Cendyn Group, LLC, No. 2:23-cv-00140 (D. Nev., filed Jan. 25, 2023).

2. Gonzalez v. IDeaS Revenue Solutions, Inc., No. 1:24-cv-08262 (N.D. Ill., filed 2024); Shattuck v. SAS Institute Inc., No. 3:24-cv-03424 (N.D. Cal., filed 2024); and Au v. IDeaS Revenue Solutions, Inc., No. 1:24-cv-06324 (N.D. Ill., filed 2024).

3. In re: RealPage, Inc., Rental Software Antitrust Litigation (No. II), No. 3:23-md-03071 (M.D. Tenn., filed 2023); Duffy v. Yardi Systems, Inc., No. 2:23-cv-01391 (W.D. Wash., filed 2023); and Mach v. Yardi Systems, Inc., No. 24-CV-063117 (Cal. Super. Ct., Alameda County, filed 2024).

4. United States v. RealPage, Inc., No. 1:24-cv-00710 (M.D.N.C., filed Aug. 23, 2024).

5. Segal v. Amadeus IT Group, S.A., No. 1:24-cv-01783 (N.D. Ill., third amended complaint, April 28, 2025); and Portillo v. CoStar Group, Inc., No. 2:24-cv-00229 (W.D. Wash., filed Feb. 20, 2024).

6. Y. Cheng, “Statement of Interest of the United States (Case No. 1:23-cv-02536),” U.S. Department of Justice, 2024.

7. Preventing Algorithmic Collusion Act of 2025, S. 232, 119th Cong. (2025-2026), www.congress.gov/bill/119th-congress/senate-bill/232/text.

8. An important issue is to continuously stay updated and not succumb to algorithmic inertia. See V.L. Glaser, O. Omidvar, and M. Safavi, “Predictive Models Can Lose the Plot. Here’s How to Keep Them on Track,” MIT Sloan Management Review 64, no. 4 (summer 2023): 20-25.

9. J. Birkinshaw, “Will AI Disrupt Your Business? Key Questions to Ask,” MIT Sloan Management Review 66, no. 4 (summer 2025): 50-56.

10. Z. Brown and A. MacKay, “Are Online Prices Higher Because of Pricing Algorithms?” Brookings Institution, July 7, 2022, www.brookings.edu.

11. J.M. Meylahn and A.V. den Boer, “Learning to Collude in a Pricing Duopoly,” Manufacturing & Service Operations Management 24, no. 5 (September-October 2022): 2577-2594, https://doi.org/10.1287/msom.2021.1074.

12. Gibson v. Cendyn Grp., LLC, No. 24-3576, slip op. at 5-6 (9th Cir. Aug. 15, 2025).