The Three Obstacles Slowing Responsible AI

Many organizations commit to principles of AI ethics but struggle to incorporate them into practice. Here’s how to bridge that gap.

In October 2023, New York City released its AI action plan, publicly committing to the responsible and transparent use of artificial intelligence. The plan included guiding principles — accountability, fairness, transparency — and the creation of a new role to oversee their responsible implementation: the algorithm management and policy officer.

But by early 2024, New York’s AI ambitions were under scrutiny. It turned out that a chatbot deployed to provide regulatory guidance to small-business owners was prone to giving misleading advice. Reports revealed that the system was misinforming users about labor laws and licensing requirements and occasionally suggesting actions that could lead to regulatory violations.1 Observers questioned not only the technical accuracy of the system but also the city’s governance protocols, oversight mechanisms, and deployment processes. The episode became a cautionary tale, not only for public institutions but for any organization deploying AI tools at scale.

This case represents just one example of a broader pattern. Across industries, companies have embraced the language of responsible AI (RAI), emphasizing fairness, accountability, and transparency. Yet implementation often lags far behind ambition as AI systems continue to produce biased outcomes, defy interpretability and explainability requirements, and trigger backlash among users.

In response, regulators have introduced a wave of new policies, including the European Union’s AI Act, Canada’s Artificial Intelligence and Data Act, and South Korea’s updated AI regulations — all placing new pressures on organizations to operationalize transparency, safety, and human oversight.

Still, even among companies that understand the potential hazards, progress remains uneven, and these companies risk embedding errors or bias into their processes or committing unexpected, and possibly serious, ethical violations at scale.

Mind the RAI Gaps

Through interviews with over 20 AI leaders, ethics officers, and senior executives across several industries, including technology, financial services, health care, and the public sector, we explored the internal dynamics that shape RAI initiatives. We found that in some cases, RAI frameworks serve as nothing more than reputational window dressing and organizations simply lack commitment to operationalizing recommended practices. But we also uncovered structural and cultural obstacles that can prevent organizations from translating their principles into sustainable practices. In particular, we identified three recurring gaps; below, we explore them and propose a set of practical strategies for bridging them.

1. The accountability gap. Of all the challenges that organizations face with respect to implementing RAI, one remains the most stubborn: accountability. Even when companies publish principles for the ethical use of AI, few define who is responsible for embedding them in day-to-day work, and even fewer establish how that responsibility should be exercised in practice. The result is that responsibility is widely shared but rarely owned, and good intentions aren’t backed by clear processes.

This disconnect often begins with how ethical principles are introduced. As one RAI adviser noted, “Companies define principles based on what they’re already doing. It legitimizes current practices. It doesn’t ask them to change much.” In these cases, principles create a sense of achieving progress without the systems or structures needed to make them actionable.

Even when intentions are sincere and concrete policies are available, implementation stalls in the absence of defined accountability processes. Who signs off on fairness reviews? Who verifies explainability claims? What happens when concerns are raised? In most cases, the processes are ad hoc. “We don’t have structured training or audits,” said one compliance officer, “just a checklist that engineers and compliance teams review. There’s no independent verification.”

This patchwork approach means that critical responsibilities often fall through the cracks. Fairness assessments are sometimes run by the same teams building the models. Biases embedded in historical data go unaddressed. “We don’t yet have a structured way to fix them,” a financial executive acknowledged, “and no one is really accountable for doing it.”

Without clear ownership, accountability for execution, and a repeatable, end-to-end process for oversight, RAI efforts drift toward symbolic compliance. Policies are published, values declared, and teams reminded to “be ethical” — but without dedicated governance, there is no systematic follow-through.

2. The strategy gap. Despite widespread commitments, AI ethics often remain disconnected from how value is created or how success is measured. This disconnect defines what we call the strategy gap: the failure to integrate AI ethical considerations into the commercial and operational logic of the organization. We found that RAI principles often don’t inform core decisions about product development, market strategy, and resource allocation.

Too often, RAI efforts are located within compliance, privacy, or risk departments — functions that tend to operate downstream from product and business decisions. “It’s still viewed as adjacent,” one RAI lead observed. “We’re consulted only after the product is built, not while it’s being designed.”

In organizations that reward rapid innovation, ethics can feel like a speed bump. One legal adviser recounted several pilots that stalled not because they failed technically but because they raised difficult questions about potential misuse. “The teams were excited about the tech but didn’t realize the product might not align with the company’s values,” she explained. “No one had that conversation upfront.” This underscores the need to take ethical considerations into account when making strategic decisions, not as an afterthought downstream.

Organizations that make a strategic priority of increasing efficiency may find that goal at odds with RAI initiatives that seek to optimize solutions for justice or fairness. As a result, RAI often gets framed as a constraint on innovation rather than a catalyst for increasing customer trust, improving quality, building long-term resilience, or meeting other strategic objectives. When ethical principles are not structurally connected to strategy, product decisions lack guardrails, and governance programs lose influence.

3. The resource gap. Even where there was genuine commitment to ethical AI practices, we found widespread mismatches between what organizations aspired to do and what their structures, staffing, and tools actually supported. “It’s still very much driven by people who care,” one adviser noted. “There’s no organizational muscle behind it yet.”

In practice, resourcing RAI seems to be an afterthought for many companies. “We don’t have structured training or procedures,” one data leader said. “We have a checklist of all the things we’re supposed to do, but it’s more about reasonable compliance than meaningful change.”

Fairness reviews are a case in point. Although technically feasible, they are rarely resourced properly. Interviewees told us that their AI teams often lacked access to staff members trained in bias mitigation — and even when those resources were available, the teams often had neither the capacity nor the political leverage to slow or stop a project based on ethical concerns. Others described well-meaning but underresourced governance boards that faded into irrelevance due to competing demands. “Unless someone’s full time on this,” an AI policy lead explained, “it becomes background noise.”

Technology, too, has proved to be a mixed bag. A data scientist at a financial institution described having access to an open-source fairness toolkit that provided neither integration into their workflow nor guidance on when and how to use it. “So we don’t,” they admitted.

Ultimately, organizations are underinvesting in the people, processes, and infrastructure required to make RAI operational. Without dedicated roles, comprehensive training, proper evaluative tools, and incentives that support ethical diligence, RAI remains what one leader called “a well-intentioned aspiration” — prominent in principle but absent in practice.

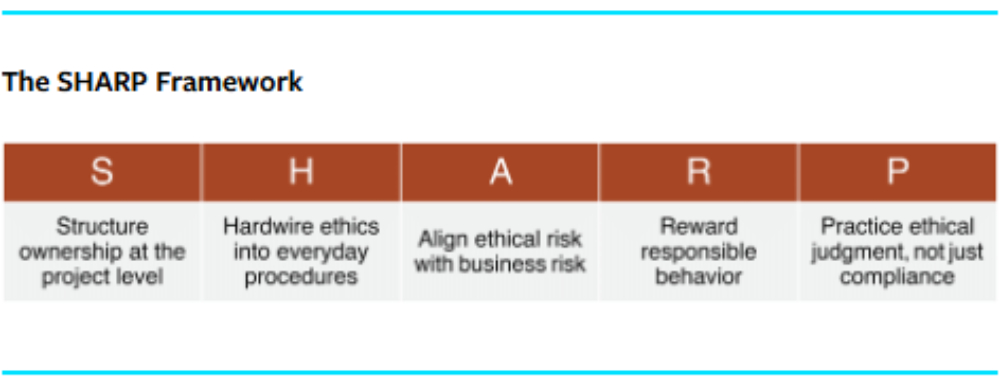

The SHARP Framework for Moving From RAI Principles to Practice

Although the gap between RAI aspirations and practice is wide, it can be narrowed. Across industries, some organizations are successfully embedding ethics into the operational core of how AI is developed and deployed. Those doing so are using the following five strategies, which together form the acronym SHARP:

- Structure ownership at the project level.

- Hardwire ethics into everyday processes.

- Align ethical risk with business risk.

- Reward responsible behavior.

- Practice ethical judgment, not just compliance.

SHARP gets to the heart of the structural and cultural obstacles to RAI. Its component strategies are not linear steps or universal templates. Rather, they reflect the varied ways that organizations can reconfigure their structures, incentives, and routines to support RAI in practice. Let’s look at each strategy in detail.

Structure ownership at the project level. A clear point of accountability on individual projects helps make ethical oversight consistent and systematic, not ad hoc. Research on AI-augmented systems has shown that users often relinquish agency when presented with algorithmic output.2 This makes formal accountability structures within the organization all the more critical during the development and deployment of such systems.

Several companies have addressed this by assigning an RAI lead to each high-impact AI initiative. Rather than placing oversight solely within the legal or compliance functions, they embed these roles within development teams. The leads’ mandate is to surface ethical risks early, create documentation, and serve as a link between product decisions and organizational values.

A global manufacturing company offers a concrete example. Its digital ethics team operates across the organization, supports all digital initiatives, and has real influence over decision-making rather than a merely symbolic role. One leader described their position this way: “I connect with people all across the business, to be the voice of business ethics, ensuring our values actively guide decisions on digital initiatives.” The ethics lead’s open door reflects how seriously the company takes its values, and it creates space for ethical reflection and dialogue at every level. These RAI leads are not external to the process; they help shape it. Their access and integration allow them to intervene early, ask difficult questions, and influence outcomes.

Similarly, at a financial services company, the introduction of project-level ethics leads created greater clarity and engagement across teams. “If it’s everyone’s job, it’s no one’s job,” said the firm’s AI governance lead. Naming a specific individual gave teams a clear point of contact and responsibility: someone tasked with identifying ethical risks early and elevating them when needed. This avoided the all-too-common diffusion of responsibility, where everyone assumes that someone else is looking out for potential harms. The approach meant that RAI leads weren’t isolated voices and were instead embedded in processes that connected technical teams with compliance, legal, and governance functions. As a result, ethical concerns were surfaced earlier and became part of formal decision-making processes rather than being raised too late or overlooked entirely.

Having formal authority to act on the risks they surface enables RAI leads to enforce responsible AI practices. This strategy reflects a broader organizational shift: moving from symbolic, high-level declarations to embedded roles that are positioned and able to act.

Hardwire ethics into everyday processes. Several organizations have begun to formalize ethical practices in their product development life cycles, but this effort must go beyond checklists. Tools such as the Ethical Matrix and Explainable Fairness frameworks are useful starting points, but, as RAI experts emphasize, ethical auditing must include an assessment of affected communities and context-specific harm evaluation.3 This reinforces the need for human oversight mechanisms that go beyond compliance checklists and engage stakeholders in meaningful review.

For example, at one health care analytics company, teams codeveloped an ethics checklist that must be completed before any AI model reaches production. It includes requirements for validation on training data, stakeholder consultation, and documentation of foreseeable harms. Importantly, this checklist is built into the DevOps pipeline, much like automated tests or deployment protocols. “We didn’t want it to be a hurdle,” the company’s engineering lead said. “It had to feel like something we do — not something someone else makes us do.”

When RAI steps are built into workflows, it is also clear who is accountable for getting them done. Standard workflows also make it easier to track what decisions were made and why.
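For technical readers, the idea of building a checklist into the DevOps pipeline can be made concrete with a minimal sketch in Python of a pre-deployment gate. It assumes the checklist is stored as structured data that the pipeline can read. The item names, the `EthicsChecklist` class, the owner labels, and the exit-code convention are hypothetical illustrations, not the health care company's actual implementation. Each required item must be both completed and signed off by a named owner, which ties the check to the accountability and record-keeping points above.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical item names, loosely modeled on the checklist requirements
# described in the text (data validation, stakeholder consultation, harms).
REQUIRED_ITEMS = (
    "training_data_validation",
    "stakeholder_consultation",
    "foreseeable_harms_documented",
)


@dataclass
class ChecklistItem:
    name: str
    completed: bool = False
    signed_off_by: Optional[str] = None  # who is accountable for this step
    notes: str = ""  # rationale, kept so later reviewers can see why


@dataclass
class EthicsChecklist:
    model_name: str
    items: dict[str, ChecklistItem] = field(default_factory=dict)

    def record(self, name: str, signed_off_by: str, notes: str = "") -> None:
        """Mark an item complete and record who signed off and why."""
        self.items[name] = ChecklistItem(name, True, signed_off_by, notes)


def gate_failures(checklist: EthicsChecklist) -> list[str]:
    """Return the reasons the model may not ship; an empty list means it may."""
    failures = []
    for name in REQUIRED_ITEMS:
        item = checklist.items.get(name)
        if item is None or not item.completed:
            failures.append(f"{name}: not completed")
        elif not item.signed_off_by:
            failures.append(f"{name}: no named owner")
    return failures


def run_gate(checklist: EthicsChecklist) -> int:
    """Print any failures and return a CI-style exit code (0 means pass)."""
    failures = gate_failures(checklist)
    for reason in failures:
        print(f"[{checklist.model_name}] blocked: {reason}")
    return 1 if failures else 0


# Example: one required item is still missing, so the gate blocks release.
example = EthicsChecklist("example-model")
example.record("training_data_validation", "ml-lead", "Checked coverage by subgroup")
example.record("stakeholder_consultation", "product-owner")
exit_code = run_gate(example)  # exit_code == 1
```

In a CI job, the returned code would typically be passed to `sys.exit` so that an incomplete checklist fails the build the same way a failing unit test would. Requiring a named owner rather than a bare tick box is a deliberate choice in this sketch: it records who made each call and why.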

Align ethical risk with business risk. In many companies, RAI remains disconnected from core business metrics. For RAI efforts to gain traction, ethical risks must be reframed in terms that resonate with executive decision makers: reputation, regulatory exposure, and operational disruption.

One European insurance company made this shift by modeling the potential financial and reputational impacts of algorithmic bias being exposed in the media. Using real-world examples, the risk team showed how an AI-related failure could trigger cascading costs, from client churn to brand damage. “Once we showed the downstream risks,” one executive told us, “the conversation changed.”

Another organization began reporting RAI indicators as part of its broader enterprise risk dashboards, alongside cybersecurity and operational integrity. This repositioned AI ethics from a values issue to a material risk management concern.

The impact was not just increased attention but also earlier involvement. Product teams began anticipating ethical risks as part of their own accountability structures rather than waiting for downstream review.

Reward responsible behavior. If managers truly want AI developers to incorporate ethical considerations into their work, they must explicitly incentivize such behavior. This is especially so given that such efforts are often in tension with other desired behaviors, such as working fast or meeting a release deadline.

A Nordic energy company addressed this by adding an “impact awareness” dimension to performance reviews for AI and data teams. Data scientists were recognized for engaging with fairness audits, improving transparency, or elevating ethical trade-offs during model development and deployment. “It’s not about docking points,” the vice president of data told us. “It’s about signaling what we value.”

Similarly, a European telecommunications firm created an internal award for responsible innovation to highlight teams that made tough ethical decisions, such as shelving a promising model due to fairness concerns or prioritizing transparency over performance.

These changes may seem modest, but they send a critical message: Responsible behavior is visible, valued, and tied to professional success.

Practice ethical judgment, not just compliance. Few processes can entirely replace the need for human judgment, especially in contexts where ethical tensions are subtle or evolving. So, to support their training initiatives, several organizations have created forums where employees can build ethical fluency through practice and reflection.

One public agency introduced recurring “AI ethics labs” that involved facilitated sessions for cross-functional teams to review real use cases and work through ethical dilemmas. The labs were intentionally informal, designed less to produce decisions than to develop a vocabulary, shared reasoning, and confidence in raising concerns. At a logistics firm, sprint retrospectives were adapted to include rotating prompts related to data use, consent, or unintended consequences. These brief discussions helped normalize ethical inquiry within agile workflows.

In both cases, the organizations depended heavily on AI-enabled decision-making processes, so their objective was to make sure their users would not turn into passive recipients of system suggestions but instead remain active moral agents. As RAI researchers have warned, overreliance on AI-based systems may crowd out habits of ethical reflection.4 Without the intentional reinforcement of ethical reasoning through practice, even well-designed systems can degrade individual competence over time.

At both organizations, the goal was not compliance — it was improving people’s capacity for making ethical judgments. Decision-making improves when teams are not just equipped with checklists but also have the opportunity to work through complex issues together.

What It Takes to Close the Responsible AI Gap

Well-designed principles alone will not ensure the broad adoption of RAI. Our research suggests that the primary barrier is not a lack of commitment or awareness. Instead, it is the difficulty of translating abstract principles into practice within complex, resource-constrained environments. In this context, RAI cannot be reduced to just a technical or ethical challenge; it becomes an organizational one. It requires organizations to build the operational infrastructure (people, processes, incentives, and routines) that make ethical intent sustainable over time.

The organizations making meaningful headway are not those with the most aspirational values statements. Progress depends less on perfect solutions and more on building better defaults — habits, structures, and feedback loops that nudge teams toward more responsible decisions. Organizations that invest in structured accountability, align incentives with ethical impact, and create space for cross-functional teams to reason through complex trade-offs together are leading the way. These companies are embedding ethical thinking into day-to-day operations.

Executive leadership is central to this work — not as the sole owner of RAI but as the enabler of the cultural and structural conditions required for it to take root. As we’ve seen, responsible AI is not a checklist. It is a long-term capability that tests an organization’s alignment, adaptability, and willingness to lead through ambiguity.

The most urgent question is no longer “What do we believe about AI?” but “What are we doing, systematically and consistently, to act on those beliefs?”