Microsoft Rolls Out Maia 200 AI Chip to Cut Reliance on Nvidia

New in-house processor targets inference costs as cloud providers grapple with rising AI demand and tight Nvidia supply.

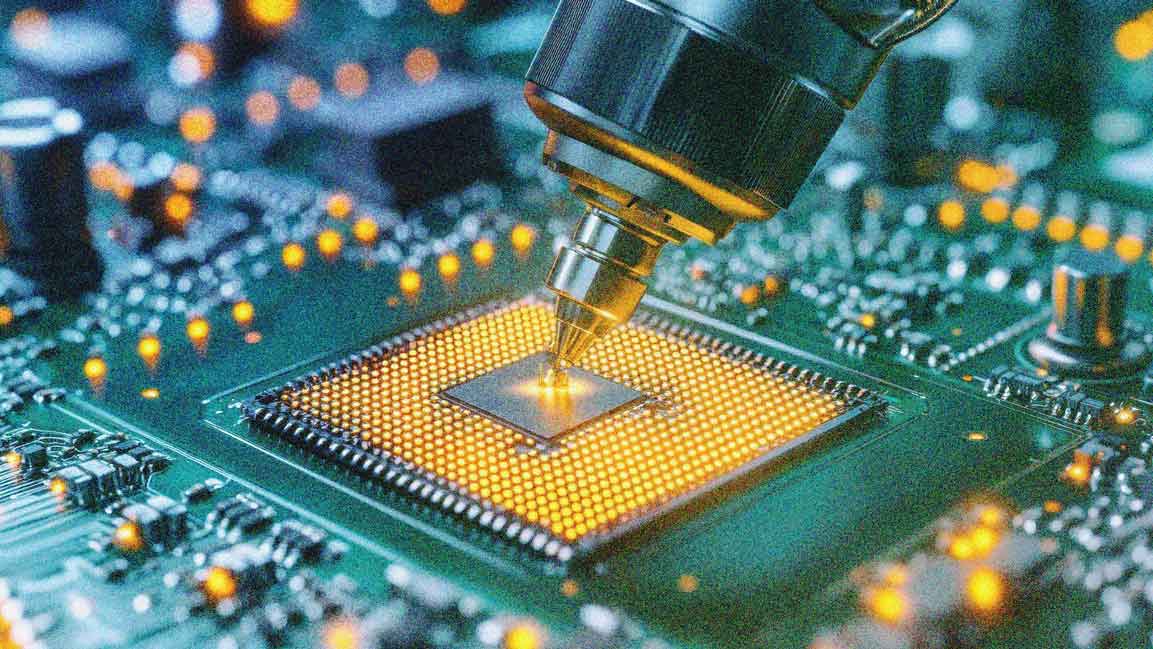

[Image source: Pankaj Kirdatt/MITSMR India]

Microsoft Corp. has begun deploying its second-generation artificial intelligence chip, Maia 200, in select cloud data centers as part of a broader push to boost efficiency for large AI models and cut dependence on Nvidia hardware.

The Maia 200 chip is designed primarily for AI inference, the process of running trained models to generate responses. Microsoft says it delivers better performance per dollar than the hardware currently in its fleet, helping curb one of the biggest operational costs of running AI services.

The first Maia 200 units are operational in Microsoft’s US Central data center region near Des Moines, Iowa, with deployments planned next in the US West 3 region near Phoenix, Arizona, and other locations to follow, the company said.

Built by Taiwan Semiconductor Manufacturing Co. (TSMC) using an advanced 3-nanometre fabrication process, Maia 200 features a redesigned memory subsystem with high-bandwidth memory and substantial on-chip SRAM to keep large models fed with data efficiently.

Microsoft describes it as the most efficient inference accelerator it has deployed and says it supports running today’s largest AI models while leaving room for future workloads.

Microsoft said the new chip will support multiple AI models across its cloud infrastructure, including OpenAI models used in Microsoft services such as Copilot. The company’s internal superintelligence team will also use Maia 200 for synthetic data generation and reinforcement learning to improve future in-house models.

“Maia 200 is part of our heterogeneous AI infrastructure and will serve multiple models,” Scott Guthrie, Microsoft’s Executive Vice-President for cloud and AI, said in a blog post.

“It can effortlessly run today’s largest models, with plenty of headroom for even bigger models in the future,” Guthrie said.

The chip rollout comes as cloud providers face rising demand for AI computing and continued supply constraints for advanced processors from Nvidia. These pressures have pushed major tech companies to invest more heavily in their own silicon development. Amazon and Google have already deployed custom AI chips to support their cloud services.

Microsoft has positioned Maia 200 against rival custom AI silicon from other hyperscalers. It said Maia 200 delivers roughly three times the 4-bit performance of Amazon's Trainium chips and competitive 8-bit performance against Google's TPUs, along with about 30% better token-generation performance per dollar than its existing infrastructure.

At the systems level, Microsoft said Maia 200 uses standard Ethernet networking rather than proprietary interconnects, a design choice the company says helps reduce costs and power use while scaling across large clusters of accelerators.

Maia 200 is currently available to a limited group of developers, researchers and AI labs through Microsoft's software development kit, with broader use expected as deployments expand across the company's cloud and AI platforms.